Interest in Artificial Intelligence (AI) has surged with the advent of Large Language Models (LLMs) such as ChatGPT, Gemini (formerly Bard), and LLaMA. These foundation models, trained on vast amounts of data, create unprecedented opportunities by giving organizations a base on which to build their own AI models. AI is poised to reshape the competitive landscape across major industries, and organizations must respond and adapt quickly to this paradigm shift. Yet recognizing AI's immense potential also means recognizing the responsibilities that come with it. Effective AI governance is essential to ensure that these capabilities do not lead to unintended consequences.

Why now?

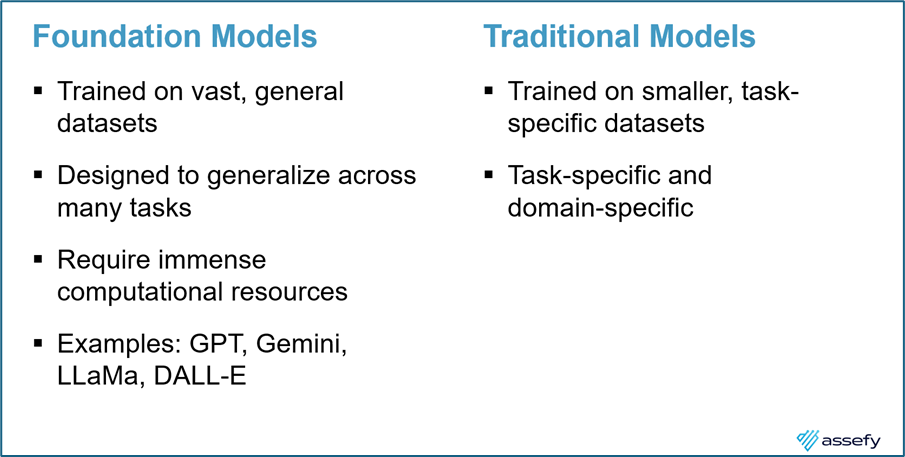

Many organizations have accumulated substantial experience with various AI use cases over the years. Yet despite steadily rising AI investment across sectors, outcomes have often fallen short of expectations. Part of the reason lies in the limitations of traditional AI models, which tend to be task-specific and rely on manually engineered features.

A transformative shift has come with foundation models: large AI models trained on a broad and diverse range of data. Foundation models are versatile and can be applied to many use cases with minimal additional training. The shift is visible not only in text generation, with ChatGPT and Gemini, but also in image generation, with DALL-E, and in code generation, with GitHub Copilot.

Risks associated with AI

Foundation models have significant environmental and human impacts. On the environmental side, they are associated with high energy consumption, resource depletion, and the generation of electronic waste. On the human side, they raise challenges that include economic disruption, bias and fairness, privacy, and security. Mitigating these risks calls for a multifaceted approach that combines technical, ethical, and regulatory measures. Organizations, researchers, and policymakers must work together to establish guidelines and frameworks that ensure AI is developed and deployed responsibly.

Risks addressable by AI governance

Adopting AI opens up a wide range of possibilities, but it must be approached with care and control. Mishandled AI can have serious repercussions, including biased models, security vulnerabilities, and substantial fines. A robust data and AI governance framework can help address the following risks:

1. Biased Training Data

Inaccuracies and biases in the training data used for AI models, including foundation models, carry through to the models' outputs, producing unfair and discriminatory predictions.

2. Data Privacy and Security

Improper handling of sensitive data during AI model development and deployment poses a risk to data privacy. Security breaches may lead to unauthorized access and potential misuse of sensitive information.

3. Lack of Data Quality

Poor-quality or incomplete data can negatively impact the performance of AI models, including foundation models, leading to unreliable predictions and decision-making.

4. Data Ownership and Control

Ambiguity around data ownership and control can lead to challenges in managing and sharing data within and outside the organization, affecting the development and deployment of AI models.

5. Regulatory Compliance

Failure to comply with data protection and privacy regulations can result in legal consequences and damage the organization’s reputation.

6. Data Retention and Deletion

Inadequate policies for data retention and deletion can lead to the accumulation of unnecessary data, posing both privacy and security risks.

7. Lack of Transparency

Lack of transparency in how data is collected, processed, and used for AI model training can erode trust among stakeholders and raise ethical concerns.

Effectively addressing these risks requires a comprehensive and proactive approach to data and AI governance, with a focus on transparency, accountability, and adherence to ethical principles. Organizations should continuously assess and update their data and